
Convolutional Neural Networks (CNNs) are the leading Deep Learning architecture for image processing tasks.

OK, but why should you care?

If the line above didn’t get you interested, how does a billion dollars sound?

That was the amount Facebook paid to acquire Instagram a few years ago. Today the app is on almost every smartphone, and you probably visit it more often than Medium.

Enter CNN

Convolutional Neural Networks are a category of Neural Networks that long-bearded geek researchers have found especially promising for image data.

They work on images in a way loosely similar to the human brain: they find small details (a line, a rectangle, a blob) and then work their way up to more abstract features. Lines arranged in a certain way form an oval; an oval with certain features inside it is a face.

The basic idea behind them is the mathematical operation called ‘convolution’.

Now if, like me, you slept through your Math lectures, all you need to know is that images are represented as multi-dimensional arrays. A 2-D grid of pixels makes intuitive sense; the extra dimension is less intuitive and holds the intensity of each colour channel in the image (remember the cool RGB palette in MS-Paint?).
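To make that concrete, here is a minimal sketch of a tiny RGB image stored as a height x width x channels array. Plain Python lists are used so it runs without any library (real code would usually use NumPy arrays), and the image values and the `shape`/`to_grayscale` helper names are made up for illustration. Averaging the three channels is just one simple way to drop back to a 2-D grid; real converters usually weight the channels differently.

```python
# A tiny, made-up 2x3 RGB "image": height x width x channels.
# Each pixel is a [red, green, blue] triple with intensities from 0 to 255.
image = [
    [[255, 0, 0], [0, 255, 0], [0, 0, 255]],        # row 0: red, green, blue
    [[255, 255, 255], [0, 0, 0], [128, 128, 128]],  # row 1: white, black, grey
]


def shape(img):
    """Return (height, width, channels) of a nested-list image."""
    return len(img), len(img[0]), len(img[0][0])


def to_grayscale(img):
    """Drop the colour dimension by averaging each pixel's three channels."""
    return [[sum(pixel) / 3 for pixel in row] for row in img]


print(shape(image))         # (2, 3, 3)
print(to_grayscale(image))  # [[85.0, 85.0, 85.0], [255.0, 0.0, 128.0]]
```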

Convolution is a series of dot products between a filter and small patches of the image. (A mathematical filter, much like your regular Snapchat filter, transforms the data into cooler, more useful forms.)
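For the curious, here is that operation written out. Assuming a single-channel image $I$ and a $k \times k$ filter $K$ (notation introduced here for illustration), each entry of the activation map $S$ is one dot product between the filter and the image patch it covers:

$$
S(i, j) = \sum_{m=0}^{k-1} \sum_{n=0}^{k-1} I(i + m,\, j + n)\, K(m, n)
$$

Strictly speaking this is cross-correlation (the filter is not flipped), which is what most deep learning libraries compute under the name "convolution". For an RGB image the sum also runs over the three colour channels, and with stride 1 and no padding an $H \times W$ image yields an $(H - k + 1) \times (W - k + 1)$ activation map.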

So we take our image (a huge matrix), convolve it with a filter (a much smaller matrix), and get an activation map.
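Here is a minimal sketch of that step in plain Python, assuming a grayscale image, stride 1 and no padding. The 4x4 image and the hand-picked edge filter are invented for illustration; in a real CNN the filter values are learned during training.

```python
def conv2d(image, kernel):
    """Slide `kernel` over `image` (stride 1, no padding) and return the activation map.

    Each output value is the dot product of the kernel with the image patch beneath it.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + m][j + n] * kernel[m][n] for m in range(kh) for n in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


# A 4x4 grayscale image: dark on the left half, bright on the right half.
image = [
    [0, 0, 9, 9],
    [0, 0, 9, 9],
    [0, 0, 9, 9],
    [0, 0, 9, 9],
]

# A hand-picked 2x2 filter that responds to a dark-to-bright vertical edge.
edge_filter = [
    [-1, 1],
    [-1, 1],
]

for row in conv2d(image, edge_filter):
    print(row)  # each row prints [0, 18, 0]
```

The filter only "gets excited" (value 18) in the middle column, exactly where the dark and bright halves meet; flat regions give 0. A 4x4 image and a 2x2 filter give a 3x3 map, since 4 - 2 + 1 = 3 in each direction.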

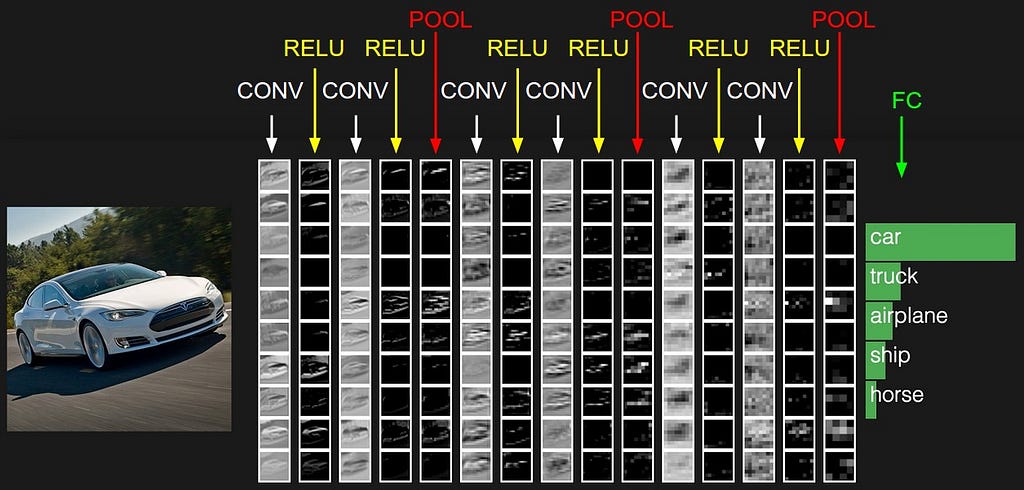

Activation map: each filter is sensitive to certain patterns; it gets excited when it sees a particular shape, colour, or form. Its responses across the original image form an activation map, shown as the layers marked Conv in the image above. These maps are then passed through an activation function and on to another set of filters, which get ‘excited’ by even more abstract features.
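The article does not say which activation function is used; the sketch below assumes ReLU (rectified linear unit), a common default in CNNs, which simply keeps positive responses and zeroes out negative ones. The activation map values are made up.

```python
def relu(activation_map):
    """Apply ReLU element-wise: keep positive responses, replace negative ones with 0."""
    return [[max(0, value) for value in row] for row in activation_map]


# A made-up activation map: positive where a filter matched its pattern,
# negative where it saw the opposite pattern.
activation_map = [
    [-3, 5, 0],
    [2, -7, 4],
]

print(relu(activation_map))  # [[0, 5, 0], [2, 0, 4]]
```

The result is what would be handed to the next set of filters.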

As we ‘go deeper’ into our Neural Network, the features climb higher in terms of human perception: we start by detecting pixel-level features, then shapes, and finally objects.

If you look closely, each Conv layer in the image becomes more recognizable, from pixels to cars. The first few layers detect things that make no sense to me; the last layer resembles our end result.

These outputs are ‘pooled’ (downsampled) and finally sent into a fully connected layer, which identifies our object from a set of possible options.
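A minimal sketch of that last stage, assuming 2x2 max pooling (a common choice; the article does not name a pooling type), followed by one fully connected layer and a softmax over two classes. The feature map, the weights, and the "cat"/"dog" labels are invented for illustration; in a trained network the weights are learned.

```python
import math


def max_pool(feature_map, size=2):
    """Max pooling with a size x size window and stride = size: keep the strongest response per block."""
    pooled = []
    for i in range(0, len(feature_map) - size + 1, size):
        row = []
        for j in range(0, len(feature_map[0]) - size + 1, size):
            block = [feature_map[i + m][j + n] for m in range(size) for n in range(size)]
            row.append(max(block))
        pooled.append(row)
    return pooled


def fully_connected(features, weights, biases):
    """One score per class: dot product of ALL features with that class's weights, plus a bias."""
    return [sum(f * w for f, w in zip(features, class_weights)) + b
            for class_weights, b in zip(weights, biases)]


def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    top = max(scores)  # subtracting the max keeps math.exp from overflowing
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


feature_map = [
    [1, 3, 0, 2],
    [4, 2, 1, 1],
    [0, 1, 5, 6],
    [2, 2, 7, 3],
]
pooled = max_pool(feature_map)                 # [[4, 2], [2, 7]]
features = [v for row in pooled for v in row]  # flattened: [4, 2, 2, 7]

# Hypothetical weights for two classes; a real network learns these.
weights = [
    [0.5, 0.0, 0.0, -0.2],   # "cat"
    [-0.1, 0.1, 0.0, 0.4],   # "dog"
]
biases = [0.0, 0.0]
labels = ["cat", "dog"]

probs = softmax(fully_connected(features, weights, biases))
print(labels[probs.index(max(probs))])  # dog
```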

Why CNN?

If you noticed, the neurons in each Conv layer are not fully connected: each one looks only at a small patch of its input, and the same filter weights are reused across the whole image. That means less complexity, fewer weights and faster training.
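To put rough numbers on "fewer weights", here is a back-of-the-envelope count under assumed sizes: a 224x224 RGB input (a common ImageNet resolution) feeding either 64 fully connected units or 64 convolutional filters of size 3x3. The sizes and helper names are illustrative, not from the article.

```python
def dense_params(inputs, units):
    """Fully connected layer: every unit has one weight per input, plus one bias."""
    return inputs * units + units


def conv_params(kernel_h, kernel_w, in_channels, filters):
    """Conv layer: each filter has kernel_h * kernel_w * in_channels weights, plus one bias.

    The same filter weights are reused at every position in the image.
    """
    return kernel_h * kernel_w * in_channels * filters + filters


height, width, channels = 224, 224, 3
dense = dense_params(height * width * channels, 64)
conv = conv_params(3, 3, channels, 64)

print(f"fully connected: {dense:,} parameters")  # fully connected: 9,633,856 parameters
print(f"3x3 convolution: {conv:,} parameters")   # 3x3 convolution: 1,792 parameters
```

That is roughly 9.6 million weights versus under 2,000. The conv layer still produces a full activation map for each of its 64 filters; the saving comes from each neuron looking at only a 3x3 patch and every position sharing the same filter weights.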

Another cool thing is that each layer learns to find a certain set of features in the output of the previous layer, and those features are often useful beyond the original task. So a trained network can be reused through ‘transfer learning’: you take a trained network (say, one that is good at detecting cars) and adapt it to distinguish between, say, sedans and SUVs.

You could create a model based on a particular CNN architecture, say ResNet34, and then use it to tell dogs from cats! (Don’t worry, the image below is not supposed to make sense. It’s there to make this essay look more professional.)

So we could effectively do what most engineers do: create a model that is inspired by (copied from) someone else’s Neural Network.

You could use an award-winning architecture such as VGGNet or AlexNet and leverage its awesomeness in your personal venture.
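The article’s example would reuse a real pretrained network such as ResNet34. The sketch below is a deliberately tiny, hypothetical stand-in that shows the same idea without any deep learning library: a frozen "backbone" (two fixed filters we pretend were learned on an earlier task) feeds a brand-new classifier head, and only the head is trained on the new task. `FROZEN_FILTERS`, `extract_features`, `train_head` and the stripe images are illustrative inventions, and the new head here is plain logistic regression.

```python
import math


def conv2d(image, kernel):
    """Stride-1, no-padding convolution (cross-correlation) returning an activation map."""
    kh, kw = len(kernel), len(kernel[0])
    return [
        [sum(image[i + m][j + n] * kernel[m][n] for m in range(kh) for n in range(kw))
         for j in range(len(image[0]) - kw + 1)]
        for i in range(len(image) - kh + 1)
    ]


# Hypothetical stand-in for a pretrained backbone: two fixed filters we pretend
# were learned on an earlier task. They stay frozen (never updated) below.
FROZEN_FILTERS = [
    [[-1, 1], [-1, 1]],    # fires on dark-to-bright vertical edges
    [[-1, -1], [1, 1]],    # fires on dark-to-bright horizontal edges
]


def extract_features(image):
    """Frozen feature extractor: conv -> ReLU -> global max pool, one number per filter."""
    return [max(max(0, v) for row in conv2d(image, k) for v in row) for k in FROZEN_FILTERS]


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1 / (1 + math.exp(-z))
    e = math.exp(z)
    return e / (1 + e)


def train_head(samples, labels, lr=0.1, epochs=200):
    """Train ONLY a new logistic-regression head on top of the frozen features."""
    feats = [extract_features(img) for img in samples]  # backbone is frozen, so compute once
    w, b = [0.0] * len(FROZEN_FILTERS), 0.0
    for _ in range(epochs):
        for x, y in zip(feats, labels):
            p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)
            grad = p - y  # gradient of the log loss w.r.t. the raw score
            w = [wi - lr * grad * xi for wi, xi in zip(w, x)]
            b -= lr * grad
    return w, b


def predict(image, w, b):
    """Return 1 for 'vertical stripe', 0 for 'horizontal stripe'."""
    score = sum(wi * xi for wi, xi in zip(w, extract_features(image))) + b
    return 1 if score > 0 else 0


# New task (a toy stand-in for "sedans vs SUVs"): vertical stripes (1) vs horizontal stripes (0).
vertical_a = [[0, 9, 0], [0, 9, 0], [0, 9, 0]]
vertical_b = [[0, 0, 9], [0, 0, 9], [0, 0, 9]]
horizontal_a = [[0, 0, 0], [9, 9, 9], [0, 0, 0]]
horizontal_b = [[0, 0, 0], [0, 0, 0], [9, 9, 9]]
samples = [vertical_a, vertical_b, horizontal_a, horizontal_b]
labels = [1, 1, 0, 0]

w, b = train_head(samples, labels)
new_image = [[0, 9, 0, 0] for _ in range(4)]  # unseen, larger image with a vertical stripe
print(predict(new_image, w, b))  # 1
```

In a real framework the same pattern usually means loading pretrained weights, freezing them, and replacing the final fully connected layer with a new one sized to your classes (in torchvision’s ResNet models, that layer is the `fc` attribute).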

What can CNNs do?

Food for thought: you could create an awesome engine that detects objects in images in real time and finds ‘a blue car by the beach’. Spoiler alert: my mentor Jeremy Howard has already showcased this in his amazing TED Talk.

Don’t worry, there is plenty left to build: by some estimates, around 85% of the Internet is pixel data, and we still lack techniques to analyse it properly.

It would take more than a lifetime to binge-watch it all. Even though Instagram’s revolutionary algorithms show you posts related to your interests, behind the scenes they rely on your hashtags and on trends among similar users, not on real-time image or video analysis. Google does the same: its Image results are simply the result of finding the text on the websites that host the images.

Even these giants have yet to develop true image-analysis systems.

This could be your shot at building something. A better self-driving car? The new Pinterest?

You can find me on Twitter @bhutanisanyam1

Subscribe to my Newsletter for a weekly curated list of Deep Learning and Computer Vision articles.

Here and Here are two articles on my Learning Path to Self-Driving Cars.

Convolutional Neural Network in 5 minutes was originally published in Hacker Noon on Medium, where people are continuing the conversation by highlighting and responding to this story.
